Creating a new post on any blog is really easy (the hardest part is thinking about the content): you can do it with a couple of clicks on your laptop, from your phone or tablet, or just by reblogging interesting posts from your mates. The same thing happens with OpenStack: you can create server instances, routers, subnets, firewalls, VPNs and load balancers with just a couple of clicks. Of course, you first need to plan and figure out why you need them. It’s not about creating things just because you can; the application this virtual infrastructure will support defines what your virtual IT architecture should be.

In my previous note about Neutron I mentioned how Neutron brings Layer 3 capabilities and how easy it is to create and manage them. Now I will describe the magic behind it, and I’m sure you will love it as much as I do. The whole idea of Software Defined Networking is fully applied in OpenStack Neutron and its most widely used third-party plug-in: Open vSwitch.

First of all, I want to mention there’s a lot of information from vendors and community contributors:

- Networking in too much detail from RedHat

- Networking Docs at OpenStack

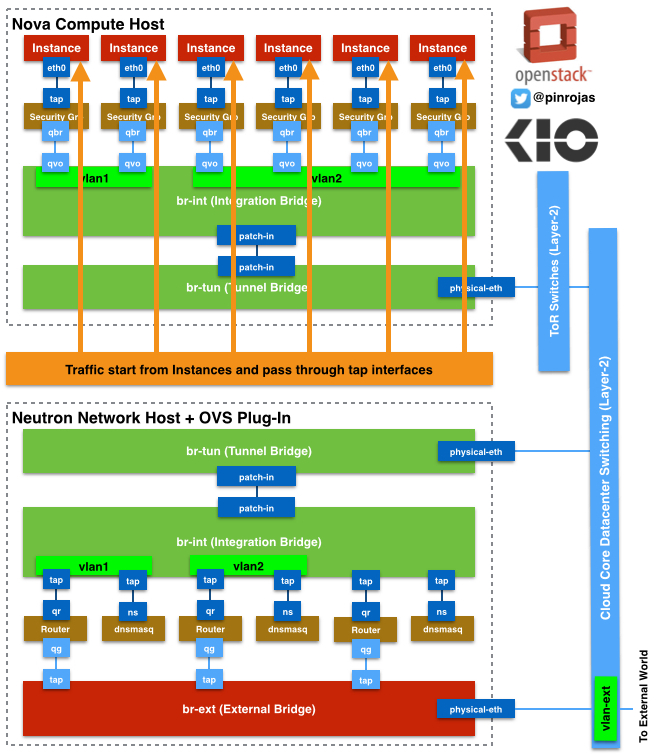

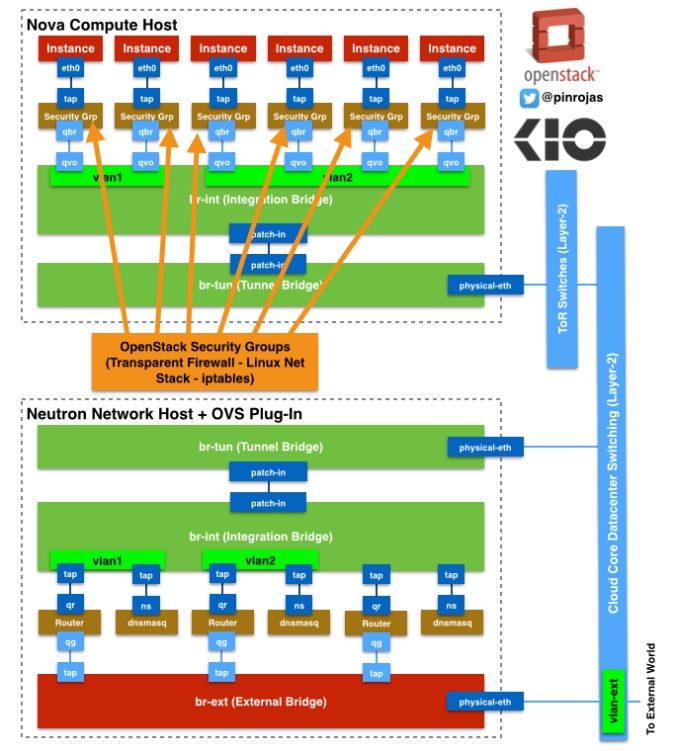

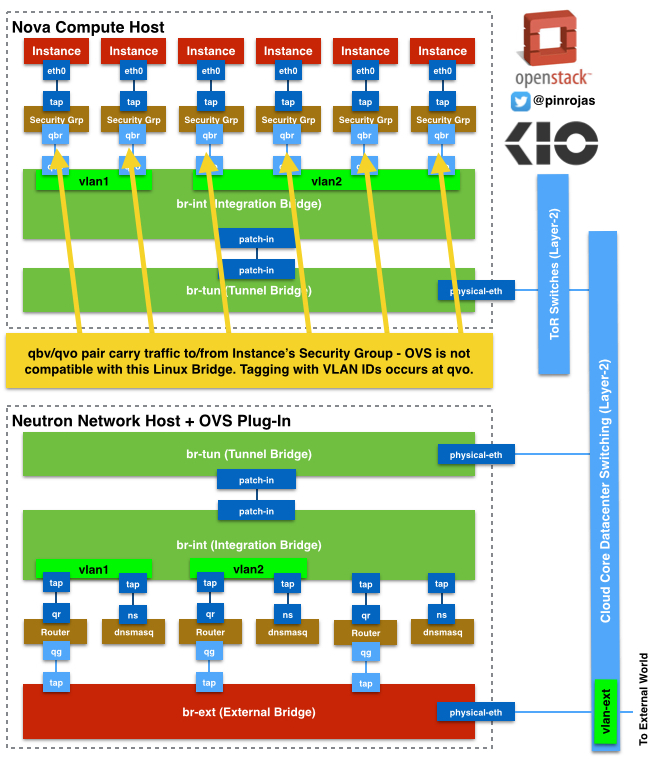

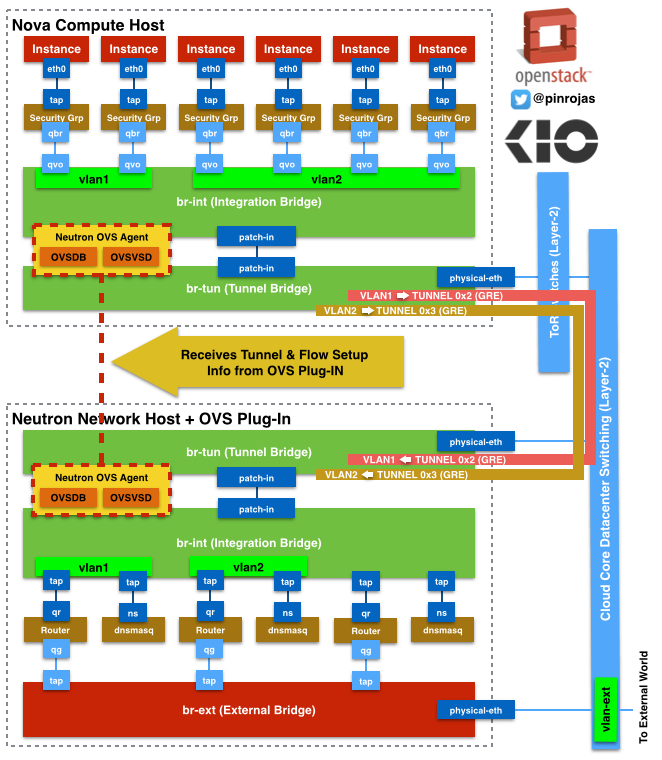

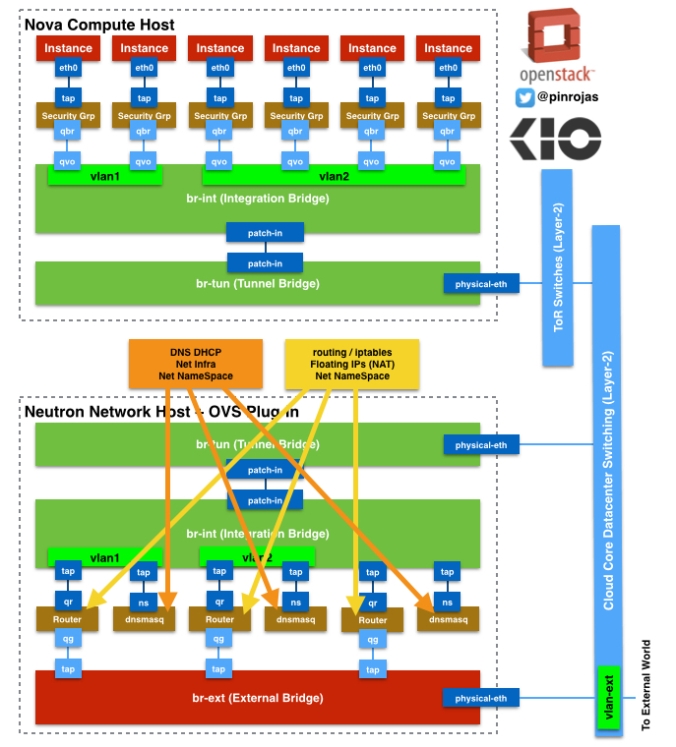

Nova Compute Host

Let’s start by describing what happens inside a Nova Compute Host with Neutron and Open vSwitch. The instances are where all traffic starts or ends. Each instance has a virtual Ethernet interface, called a tap device, attached to it, which the instance sees as a logical interface such as “eth0”. These tap devices are connected directly to a Linux Bridge, where OpenStack Security Groups are enforced through iptables. This Linux Bridge acts as a transparent firewall for its instance: each instance gets its own bridge, which enforces the security groups associated with that instance. The Linux Bridge and its iptables rules run inside an associated Linux network stack (a network stack is the minimal set of network resources needed to connect to any other system at layer 2 or layer 3).
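To make the "transparent firewall" idea concrete, here is a minimal sketch of how a security group behaves logically: everything inbound is denied unless at least one rule allows it. The `Rule` class and `ingress_allowed` function are hypothetical names invented for this illustration; they are not Neutron's data model and they do not generate real iptables rules.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    """One ingress allow rule (hypothetical shape, not Neutron's model)."""
    protocol: str      # "tcp", "udp" or "icmp"
    port_min: int      # first allowed destination port
    port_max: int      # last allowed destination port
    remote_cidr: str   # source range that is allowed to connect


def ingress_allowed(rules, protocol, port, source_ip):
    """Default deny: a packet passes only if at least one rule matches."""
    src = ip_address(source_ip)
    return any(
        r.protocol == protocol
        and r.port_min <= port <= r.port_max
        and src in ip_network(r.remote_cidr)
        for r in rules
    )


web_rules = [
    Rule("tcp", 22, 22, "10.0.0.0/24"),  # SSH only from the tenant subnet
    Rule("tcp", 80, 80, "0.0.0.0/0"),    # HTTP from anywhere
]

print(ingress_allowed(web_rules, "tcp", 80, "203.0.113.7"))  # True
print(ingress_allowed(web_rules, "tcp", 22, "203.0.113.7"))  # False
print(ingress_allowed(web_rules, "tcp", 22, "10.0.0.15"))    # True
```

On a real compute host the equivalent checks are performed by iptables chains on the instance's Linux Bridge, not by Python code.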

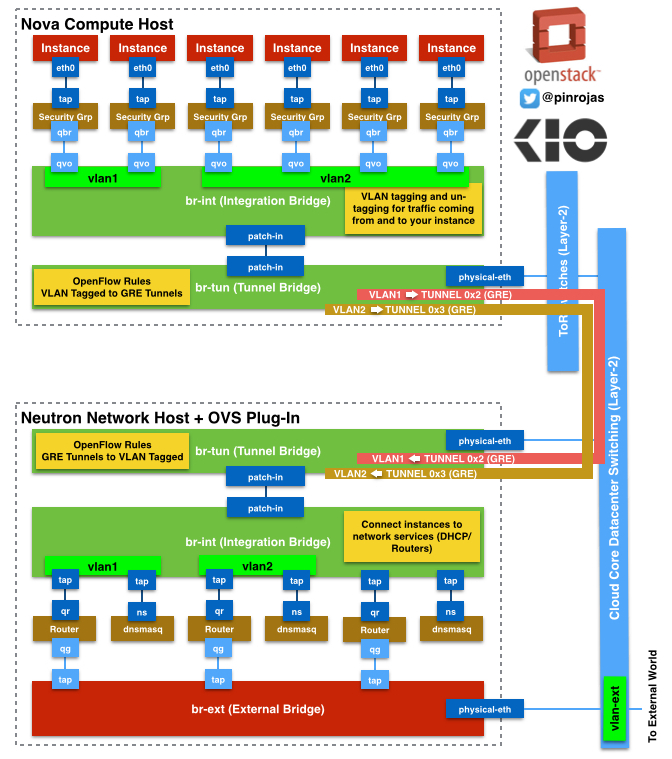

Open vSwitch bridges are not compatible with this Linux Bridge, so the architecture uses a veth pair of interfaces called qvb and qvo. qvb is attached to the Linux Bridge (qbr) and qvo is attached to the Integration Bridge. The Integration Bridge (br-int) is part of the Open vSwitch solution and is responsible for tagging and untagging, with VLAN IDs, all network traffic going to and from the instances. It is also responsible for connecting all the instances that belong to the same tenant, or for sending the traffic out to the Tunnel Bridge and then on to external network components.
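When you look at a compute host, it helps to know how the per-instance devices are named. As far as I know, the convention is a short prefix (tap, qbr, qvb, qvo) followed by the first 11 characters of the Neutron port ID. The sketch below assumes that convention; the `device_names` helper and the port ID are made up for illustration.

```python
# Prefixes for the devices that serve one instance port.
# Assumption: each name is the prefix plus the first 11 characters
# of the Neutron port ID (hybrid Linux Bridge + OVS plugging).
PREFIXES = {
    "tap": "tap",               # instance-facing tap device
    "linux_bridge": "qbr",      # per-instance Linux Bridge (iptables)
    "veth_bridge_side": "qvb",  # veth end attached to the Linux Bridge
    "veth_ovs_side": "qvo",     # veth end attached to br-int
}
SUFFIX_LEN = 11


def device_names(port_id):
    """Return the expected device name for each role of one port."""
    suffix = port_id[:SUFFIX_LEN]
    return {role: prefix + suffix for role, prefix in PREFIXES.items()}


# Hypothetical port ID, used only for this example.
names = device_names("3f1c2a9e-7b44-4c1d-9a53-0d2e6f8b1c77")
for role, name in names.items():
    print(f"{role:18} {name}")
```

With the names in hand you can compare them against the output of `ip link` on the host and of `ovs-vsctl show` for br-int.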

The Tunnel Bridge (br-tun) is connected to the Integration Bridge (br-int) through patch interfaces (patch-tun on br-int and patch-int on br-tun). The OpenFlow rules that decide how traffic is placed into the tunnels run on br-tun. The tunnels are built with GRE, and every VLAN ID is associated with a GRE tunnel ID.
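The VLAN-to-tunnel association can be pictured as a small two-way lookup table held by br-tun on each host. The sketch below is only a model of that idea: `TunnelBridgeMap` is a hypothetical class, the IDs are invented, and it assumes that VLAN tags are local to each host while the tunnel ID identifies the tenant network across hosts. The real translation is done by OpenFlow rules, not by Python.

```python
class TunnelBridgeMap:
    """Toy model of the VLAN <-> GRE tunnel ID table on one host's br-tun."""

    def __init__(self):
        self._vlan_to_tunnel = {}
        self._tunnel_to_vlan = {}

    def bind(self, local_vlan, tunnel_id):
        """Associate a host-local VLAN tag with a tunnel ID (one to one)."""
        if local_vlan in self._vlan_to_tunnel or tunnel_id in self._tunnel_to_vlan:
            raise ValueError("VLAN or tunnel ID already bound on this host")
        self._vlan_to_tunnel[local_vlan] = tunnel_id
        self._tunnel_to_vlan[tunnel_id] = local_vlan

    def outbound(self, local_vlan):
        """Traffic leaving the host: VLAN tag -> tunnel ID."""
        return self._vlan_to_tunnel[local_vlan]

    def inbound(self, tunnel_id):
        """Traffic arriving from a tunnel: tunnel ID -> VLAN tag."""
        return self._tunnel_to_vlan[tunnel_id]


# Assumption: VLAN tags differ per host, the tunnel ID is shared.
compute = TunnelBridgeMap()
compute.bind(local_vlan=1, tunnel_id=1001)
compute.bind(local_vlan=2, tunnel_id=1002)

network = TunnelBridgeMap()
network.bind(local_vlan=5, tunnel_id=1001)

tunnel_id = compute.outbound(1)                    # leaves compute host
vlan_on_network_host = network.inbound(tunnel_id)  # arrives at Neutron host
print(tunnel_id, vlan_on_network_host)             # 1001 5
```

The point of the model is that the same tenant network can carry VLAN tag 1 on one host and tag 5 on another, as long as both hosts agree on the tunnel ID.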

Top of Rack and Core Cloud Switching

All hosts (controller, compute, network, etc.) are connected to a core cloud network composed of high-performance switches. The good thing about this architecture, where all data is controlled through virtual resources, is that these switches only provide layer 2 transport. You don’t need any VLANs beyond the main ones that split internal and external traffic (probably no more than a couple of them).

You don’t need advanced licenses for routing, GRE, security and so on; all of these components are provided by Neutron and Open vSwitch. There is no lock-in with any vendor, at least until you start using specific plug-ins to handle some control features in hardware or on dedicated appliances. You are therefore free to use products from different well-known vendors that offer a powerful data backplane to transport large amounts of data with the lowest latency.

Neutron Network Host

The Neutron Host (or Hosts) are independent servers where all the more advanced network services and components run (routers, DHCP, external firewalls, etc.). All traffic to the external network (or Internet) that leaves the Nova servers through the GRE tunnels ends at the br-tun defined on the Neutron Hosts. br-tun holds the OpenFlow rules that match VLAN IDs to GRE tunnel IDs, and all conversion between VLAN and GRE happens at this bridge. This bridge is connected to the integration bridge (br-int) inside the Neutron Host through the patch interfaces.

As on the compute host, br-int untags and tags the traffic, but in this case to and from the routers and dnsmasq resources. Routers and dnsmasq run in independent namespaces, each with its own Linux network stack. Namespaces make it possible to handle traffic with overlapping private IPs between routers that belong to different tenants or projects. Routers route traffic between a tenant’s subnets and also to and from the external world. Routers also use Linux iptables to filter traffic and to make floating IPs work, through NAT (Network Address Translation), for the chosen instances. dnsmasq also runs on its own Linux network stack, with DHCP and DNS processes that serve only the associated tenant.
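Here is a small sketch of why namespaces matter for overlapping private IPs and how floating IPs map to instances. Each router keeps its own NAT table, so two tenants can both use 10.0.0.5 without clashing. `RouterNamespace` is a hypothetical class and the router IDs and addresses are invented; the `qrouter-` prefix follows the naming I have seen for router namespaces, so treat it as an assumption.

```python
from ipaddress import ip_address


class RouterNamespace:
    """Toy model of one tenant router with its own floating IP NAT table."""

    def __init__(self, router_id):
        # Assumption: router namespaces are named "qrouter-<router id>".
        self.name = f"qrouter-{router_id}"
        self._floating_to_fixed = {}

    def associate(self, floating_ip, fixed_ip):
        """Bind a public floating IP to an instance's private fixed IP."""
        self._floating_to_fixed[ip_address(floating_ip)] = ip_address(fixed_ip)

    def dnat(self, destination_ip):
        """Inbound: rewrite floating -> fixed; None if not associated."""
        return self._floating_to_fixed.get(ip_address(destination_ip))

    def snat(self, source_ip):
        """Outbound: rewrite fixed -> floating; None if not associated."""
        src = ip_address(source_ip)
        for floating, fixed in self._floating_to_fixed.items():
            if fixed == src:
                return floating
        return None


tenant_a = RouterNamespace("aaaa")
tenant_b = RouterNamespace("bbbb")

# Both tenants use the same private address without any conflict,
# because each lookup happens inside its own namespace.
tenant_a.associate("203.0.113.10", "10.0.0.5")
tenant_b.associate("203.0.113.20", "10.0.0.5")

print(tenant_a.name, tenant_a.dnat("203.0.113.10"))  # qrouter-aaaa 10.0.0.5
print(tenant_b.name, tenant_b.snat("10.0.0.5"))      # qrouter-bbbb 203.0.113.20
```

On a real Neutron host you can list the namespaces with `ip netns list` and inspect the NAT rules of one router with `ip netns exec <namespace> iptables -t nat -S`.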

Important tips to design Neutron+OVS Architectures

The Neutron hosts run the routers and the basic and advanced network and security services, so almost all data goes through them. Size these servers with as much memory as you can, since memory is the resource these processes use most. Consider having at least two servers with identical configuration, so that one can act as a stand-by if the main one has any issue.

It’s really important to choose high-performance switches, because a large amount of data is transported between nodes. Use at least a two-level design for cloud switching, and try to keep instances of the same tenant in the same cabinet, so that almost all of their traffic stays inside the Top of Rack switch.

Well, this is it, see you next time!
