Let’s say you need a more scalable layer-2 solution for your VMs and containers. You can set up some Linux bridges, or maybe use OVS and try some VXLAN/GRE encapsulation between hypervisors. Containers are a different kind of animal though: there are way more endpoints in every server, and once we spread them across the datacenter, troubleshooting can turn into your worst nightmare.

DevOps or IT guys normally treat the network as a black box that connects endpoints, and still think that hardware appliances can be replaced by modules in the kernel (I used to be one of them). It’s also important to say that this sort of thinking could fit Enterprise use cases, but a Telco cloud, with its VNFs and CNFs, is in another league.

Calico is a great solution that works over a pure Layer-3 approach. Why? Well, they say that “Layer 2 networking is based on broadcast/flooding. The cost of broadcast scales exponentially with the number of hosts”. And yes, they are absolutely right… unless, of course, you put something like EVPN (RFC 7432, with the requirements in RFC 7209) to work helping you build Layer-2 services.

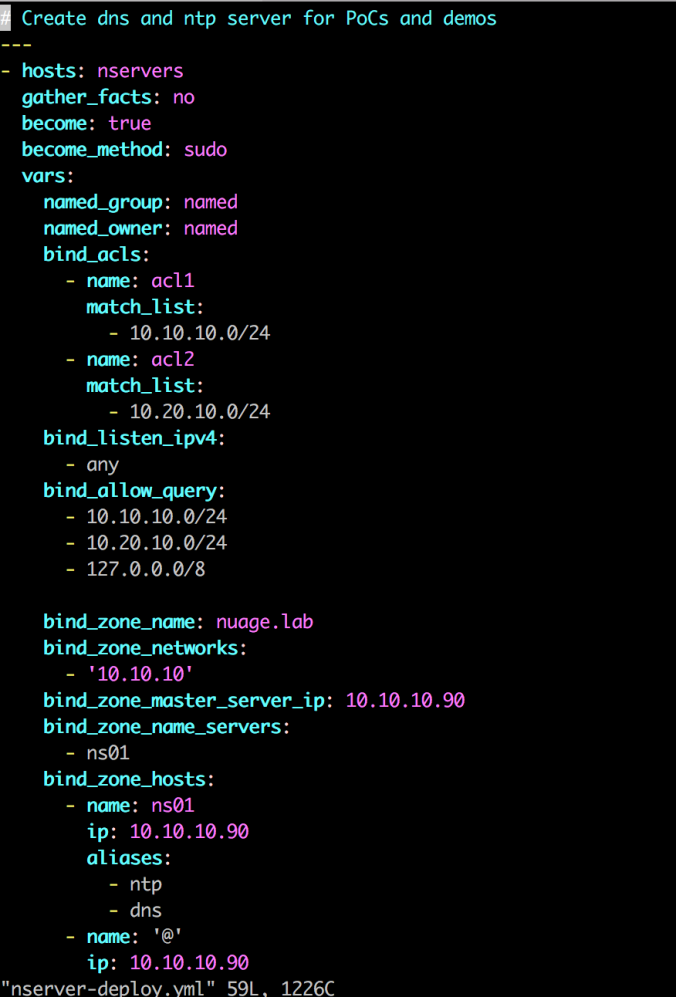

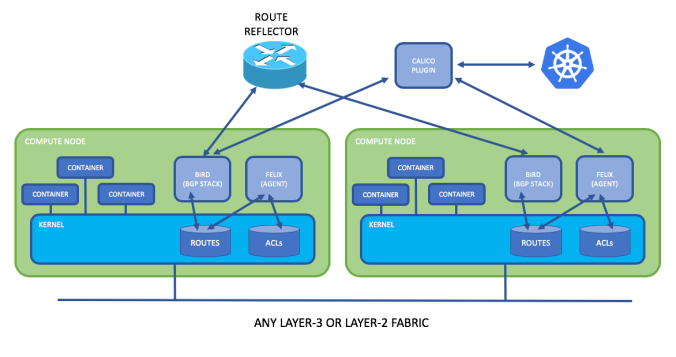

Calico Kubernetes Architecture

EVPN doesn’t rely on flooding and learning like other technologies; it actually uses the control plane to advertise MAC addresses across different places. They can say: “Well, you will be using encapsulation, which also has an important cost”… Well, yes and no. EVPN can actually use different data planes (e.g. MPLS). Nuage may use VXLAN, but the technology itself doesn’t limit you to that transport.

Also, scale can kill any good idea, like relying on Linux kernel modules and services to route all communications in the datacenter. Treat every server as a router? Create security policies for every endpoint in the DC? Uff! For example, Calico took the idea from the biggest network known today. Yes, the Internet! But that doesn’t mean this network is optimal. Many companies need to rely on MPLS or other sorts of private WAN services for higher demands in security and performance.

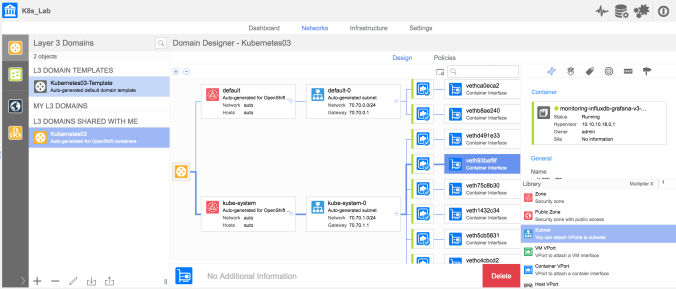

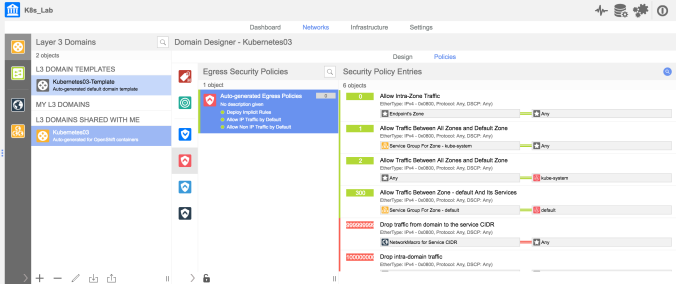

Nuage and Kubernetes Architecture

Layer-2 services help to simplify network design and security. Some Layer-2 domains don’t need to connect to any Layer-3 domain, for security reasons. Also, Layer-2 domains in the datacenter can be directly attached to a Layer-2 service in the WAN (e.g. VPLS, MPLS). We can add many more things to the list, like private LTE, network slicing…

EVPN benefits

EVPN came as an improved model based on what was learnt from MPLS/VPLS operations. It’s also the joint work of many vendors, such as Nokia (formerly ALU), Juniper and Cisco. MPLS/VPLS relies on flooding and learning to build the Layer-2 forwarding database (FDB). EVPN introduced a new model for Ethernet services: it uses Layer-3, through MP-BGP, as a new delivery model to distribute MAC and IP routing information instead of flooding and learning.
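To make the difference concrete, here is a toy sketch in Python. It is not a real BGP implementation: the `Vtep` and `ControlPlane` classes, the `copies_sent` helper and the `hv0`…`hv3` names are all hypothetical, made up for illustration. It only counts how many copies of a frame leave a hypervisor when the destination MAC is unknown (flooded to every remote peer) versus when the MAC was advertised ahead of time.

```python
from dataclasses import dataclass, field


@dataclass
class Vtep:
    """Hypothetical software router / tunnel endpoint on one hypervisor."""
    name: str
    fdb: dict = field(default_factory=dict)  # MAC -> name of the owning VTEP


class ControlPlane:
    """Hypothetical stand-in for MP-BGP: pushes MAC ownership to all peers."""

    def __init__(self):
        self.peers = []

    def add_peer(self, vtep):
        self.peers.append(vtep)

    def advertise(self, origin, mac):
        # Rough analogue of advertising a MAC route: every peer installs
        # the MAC in its FDB without ever seeing a data-plane frame.
        for peer in self.peers:
            peer.fdb[mac] = origin.name


def copies_sent(src, dst_mac, all_vteps):
    """Copies of one frame sent by src: 1 if the MAC is known, else a flood."""
    if dst_mac in src.fdb:
        return 1
    return len(all_vteps) - 1  # unknown unicast goes to every remote VTEP


vteps = [Vtep(f"hv{i}") for i in range(4)]
control_plane = ControlPlane()
for vtep in vteps:
    control_plane.add_peer(vtep)

mac = "52:54:00:aa:bb:01"
before = copies_sent(vteps[0], mac, vteps)  # MAC unknown: flooded
control_plane.advertise(vteps[3], mac)      # hv3 announces the MAC
after = copies_sent(vteps[0], mac, vteps)   # MAC known: single unicast copy
print(before, after)
```

With four hypervisors the flood costs three copies and the advertised case costs one; the gap grows with every hypervisor you add, which is the scaling argument in a nutshell.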

In summary, the key benefits we can carry over to CNF/VNF use cases:

- Scalability: Unknown unicast flooding is suppressed, since all active MACs and IPs are advertised by the leaf or by the software router you have installed in the hypervisor.

- Peace of mind: The network admin gets better control over how cloud instances scale, avoiding issues with flooding, loops or MAC mobility/duplication. And the cloud admin can keep provisioning and moving instances around with minimal concern about the impact on the network, and with less overhead in setting up Layer-2 services.

- Workload mobility: If local learning is used, software routers cannot detect that a MAC address has moved to another hypervisor or host. EVPN uses a MAC mobility sequence number, always selecting the highest value, and rapidly advertises any change. Also, the software router local to the hypervisor always responds to ARP requests for the default gateway, which avoids tromboning traffic across remote servers after a MAC moves.

- Ready to work with IPv6: EVPN can manage IPv4 and IPv6 in both the control and data planes.

- Industry standard: The software router can be integrated directly with Layer-2 services to the WAN through datacenter gateways, efficiently advertising MAC and IP routes from VMs and containers. Some VNFs/CNFs are very complex communication instances that need to work directly with Ethernet services. For them, it’s hard to see a more seamless and standard solution than EVPN. Some of you can tell me Segment Routing can be a better fit… Agreed. Great material for a “later” post though.

- Resiliency: Multi-homing with all-active forwarding and load balancing between PEs. Don’t waste bandwidth on active/standby links. You can create a BGP multi-homed subnet to the datacenter gateway (DCGW) from any virtual workload.
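The sequence-number rule from the workload mobility point can be sketched in a few lines of Python. This is a simplified illustration, not router code: `MacRoute`, `MacTable` and `advertise_move` are hypothetical names, and tie-breaking between routes with equal sequence numbers is left out on purpose (here the existing entry simply stays).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MacRoute:
    mac: str
    next_hop: str  # hypervisor currently advertising the MAC
    seq: int = 0   # MAC mobility sequence number


class MacTable:
    """Hypothetical table keeping one best route per MAC address."""

    def __init__(self):
        self.best = {}

    def receive(self, route):
        """Install the route only if it is new or has a higher sequence."""
        current = self.best.get(route.mac)
        if current is None or route.seq > current.seq:
            self.best[route.mac] = route
            return True
        return False  # stale or equal sequence: existing entry is kept


def advertise_move(table, mac, new_hop):
    """A hypervisor sees the MAC locally and advertises it with seq + 1."""
    current = table.best.get(mac)
    seq = current.seq + 1 if current else 0
    route = MacRoute(mac, new_hop, seq)
    table.receive(route)
    return route


table = MacTable()
mac = "52:54:00:aa:bb:01"
first = advertise_move(table, mac, "hv1")  # workload boots on hv1
moved = advertise_move(table, mac, "hv2")  # workload migrates to hv2
stale_accepted = table.receive(first)      # late copy of the old route
print(table.best[mac].next_hop, moved.seq, stale_accepted)
```

Because the old advertisement carries a lower sequence number, a late or replayed copy of it can never pull traffic back to the hypervisor the workload has already left.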

Reference and Source

Info from Calico: https://www.projectcalico.org/why-calico/

Ethernet VPN (EVPN) – Overlay Networks for Ethernet Services: