Update – August 1st, 2016: I’ve made an update thanks to @karkull’s feedback, with some changes to the neutron.conf and nova.conf files. I’ve also made important changes to the way I present the info in this post.

Hi there,

In this post, I will install a nested PackStack Liberty deployment with a controller/network node and a Nova compute node. Then I will install the Nuage plugin for Neutron along with the metadata agent and the Heat and Horizon packages. Finally, I will install our VRS (Virtualized Routing and Switching) to replace the OVS instance.

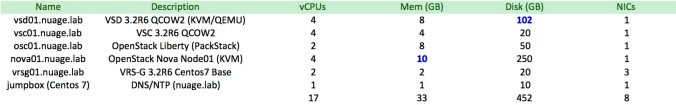

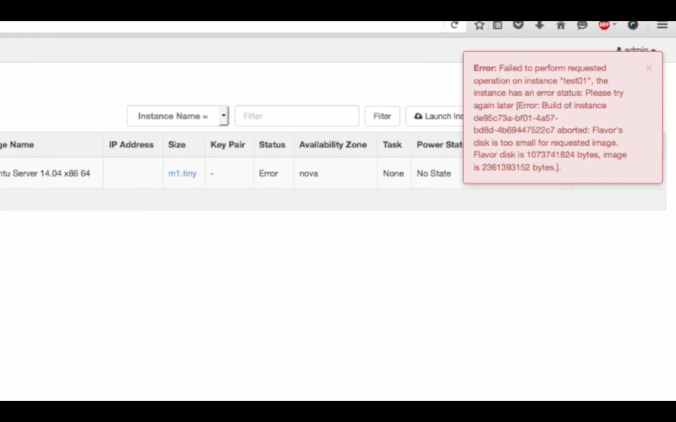

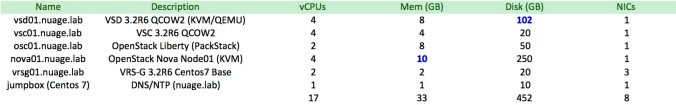

I’ve made some changes since my last post. I’ve created a couple of new flavors, nuage.osc.2 and nuage.nova.2, because I ran into memory capacity issues on the OpenStack Controller. From now on, use these instead of the nuage.osc and nuage.nova flavors:

[root@box01 ~(keystone_admin)]# openstack flavor create --ram 10240 --disk 250 --vcpus 4 --public nuage.nova.2

| Field | Value |
|---|---|
| OS-FLV-DISABLED:disabled | False |
| OS-FLV-EXT-DATA:ephemeral | 0 |
| disk | 250 |
| id | 4e191554-25f9-4ce7-bb1b-80941d6ab839 |
| name | nuage.nova.2 |
| os-flavor-access:is_public | True |
| ram | 10240 |
| rxtx_factor | 1.0 |
| swap | |
| vcpus | 4 |

[root@box01 ~(keystone_admin)]# openstack flavor create --ram 8192 --disk 50 --vcpus 4 --public nuage.osc.2

| Field | Value |
|---|---|
| OS-FLV-DISABLED:disabled | False |
| OS-FLV-EXT-DATA:ephemeral | 0 |
| disk | 50 |
| id | a98464a5-1008-45bb-972d-7997cc2f0df3 |
| name | nuage.osc.2 |
| os-flavor-access:is_public | True |
| ram | 8192 |
| rxtx_factor | 1.0 |
| swap | |
| vcpus | 4 |

Our list of instances will now be:

OpenStack Controller

I will install an OpenStack controller/network node with the following services: Neutron, Horizon, Heat, Nova, Keystone and Glance, plus a Nova compute server with KVM.

Let’s start by creating the server:

[root@box01 ~]# . keystonerc_nuage

[root@box01 ~(keystone_nuage)]# openstack server create --image centos7-image --flavor nuage.osc.2 --key-name pin-laptop --nic net-id=nuage-lab,v4-fixed-ip=192.168.101.6 osc01

| Field | Value |
|---|---|
| OS-DCF:diskConfig | MANUAL |
| OS-EXT-AZ:availability_zone | |
| OS-EXT-STS:power_state | 0 |
| OS-EXT-STS:task_state | scheduling |
| OS-EXT-STS:vm_state | building |
| OS-SRV-USG:launched_at | None |
| OS-SRV-USG:terminated_at | None |
| accessIPv4 | |
| accessIPv6 | |
| addresses | |
| adminPass | fdqWisumw9tB |
| config_drive | |
| created | 2016-05-23T17:15:20Z |
| flavor | nuage.osc.2 (a98464a5-1008-45bb-972d-7997cc2f0df3) |
| hostId | |
| id | 859bfab9-6547-471f-b83f-73b7997a2b7f |
| image | snap-160519-osc01 (6082c049-a98d-4fa3-87be-241e08ea0b19) |
| key_name | pin-laptop |
| name | ocs01 |
| os-extended-volumes:volumes_attached | [] |
| progress | 0 |
| project_id | 39e2f35bc10f4047b1ea77f79559807d |
| properties | |
| security_groups | [{u'name': u'default'}] |
| status | BUILD |
| updated | 2016-05-23T17:15:20Z |
| user_id | c91cd992e79149209c41416a55a661b1 |

I will add the floating IP 192.168.1.30 so I can reach osc01 from my home network:

openstack ip floating create external_network

openstack ip floating add 192.168.1.30 osc01

Now let’s prepare the controller and install PackStack.

OpenStack Controller: disable selinux and update

Let’s disable SELinux to save resources.

[root@ocs01 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=DISABLED

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@ocs01 ~]# vi /etc/grub2.cfg

Add selinux=0 to the kernel (linux16) line in /etc/grub2.cfg and reboot. Here is an extract of the file:

### BEGIN /etc/grub.d/10_linux ###

menuentry 'CentOS Linux (3.10.0-327.13.1.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-327.13.1.el7.x86_64-advanced-8a9d38ed-14e7-462a-be6c-e385d6b1906d' {

load_video

set gfxpayload=keep

insmod gzio

insmod part_msdos

insmod xfs

set root='hd0,msdos1'

if [ x$feature_platform_search_hint = xy ]; then

search --no-floppy --fs-uuid --set=root --hint='hd0,msdos1' 8a9d38ed-14e7-462a-be6c-e385d6b1906d

else

search --no-floppy --fs-uuid --set=root 8a9d38ed-14e7-462a-be6c-e385d6b1906d

fi

linux16 /boot/vmlinuz-3.10.0-327.13.1.el7.x86_64 root=UUID=8a9d38ed-14e7-462a-be6c-e385d6b1906d ro console=tty0 console=ttyS0,115200n8 crashkernel=auto console=ttyS0,115200 LANG=en_US.UTF-8 selinux=0
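If you’d rather not edit these files by hand, both SELinux changes can be scripted. Here’s a minimal sketch, assuming GNU sed and the stock CentOS 7 layout of /etc/selinux/config. The function name disable_selinux_config is my own, and the grubby command in the comment is an alternative to editing /etc/grub2.cfg directly, not what I ran for this lab.

```bash
# disable_selinux_config [FILE]  (hypothetical helper)
# Switches the active SELINUX= line to "disabled". SELINUXTYPE= is not touched
# because the pattern needs "=" right after "SELINUX"; comment lines start
# with "#" and are not touched either.
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Alternative to editing /etc/grub2.cfg by hand (CentOS 7 ships grubby);
# run as root, then reboot:
#   grubby --update-kernel=ALL --args="selinux=0"
```

Usage: run disable_selinux_config as root on osc01, then reboot.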

Update your system with “yum -y update”. Set your timezone (US/Central in my case): sudo ln -sf /usr/share/zoneinfo/US/Central /etc/localtime. The -f flag replaces the existing /etc/localtime link, so you don’t need to delete it first.

OpenStack Controller: Configure NTP Server

Install ntp and add your jumpbox server to /etc/ntp.conf as follows (only an extract of the file is shown):

[root@ocs01 ~]# yum -y install ntp

Loaded plugins: fastestmirror

#

# some boring lines

# more boring lines

#

Installed:

ntp.x86_64 0:4.2.6p5-22.el7.centos.1

Dependency Installed:

autogen-libopts.x86_64 0:5.18-5.el7 ntpdate.x86_64 0:4.2.6p5-22.el7.centos.1

Complete!

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server jumpbox.nuage.lab iburst

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst
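The ntp.conf edit can also be done non-interactively. This is a sketch that assumes GNU sed and an ntp.conf whose pool servers sit on lines starting with "server "; add_ntp_server is a hypothetical helper name. It puts the jumpbox in front of the pool servers, as in the extract above, and does nothing if the line is already there.

```bash
# add_ntp_server FILE HOST  (hypothetical helper)
# Inserts "server HOST iburst" before the first existing "server" line;
# appends it when the file has no server lines at all.
add_ntp_server() {
  local conf="$1" host="$2"
  local line="server ${host} iburst"
  # Already present (whole-line, fixed-string match): nothing to do
  if grep -qxF "$line" "$conf"; then
    return 0
  fi
  if grep -q '^server ' "$conf"; then
    # GNU sed: the 0,/re/ range ends at the first match, and s//.../ reuses
    # that regex, so only the first "server" line gets the new line in front
    sed -i "0,/^server /s//${line}\nserver /" "$conf"
  else
    printf '%s\n' "$line" >> "$conf"
  fi
}
```

For example, add_ntp_server /etc/ntp.conf jumpbox.nuage.lab, then restart ntpd as shown next.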

Synchronize the time as follows:

[root@ocs01 ~]# service ntpd stop

Redirecting to /bin/systemctl stop ntpd.service

[root@ocs01 ~]# ntpdate -u jumpbox.nuage.lab

16 May 19:49:30 ntpdate[11914]: adjust time server 192.168.101.3 offset 0.018515 sec

[root@ocs01 ~]# service ntpd start

Redirecting to /bin/systemctl start ntpd.service

[root@ocs01 ~]# ntpstat

synchronised to NTP server (107.161.29.207) at stratum 3

time correct to within 7972 ms

polling server every 64 s

OpenStack Controller: pre-tasks to packstack installation

Install PackStack by running “yum install -y https://repos.fedorapeople.org/repos/openstack/openstack-liberty/rdo-release-liberty-2.noarch.rpm” and then “yum install -y openstack-packstack”.

I’ve created a snapshot of this server to use later. Take a look at the following:

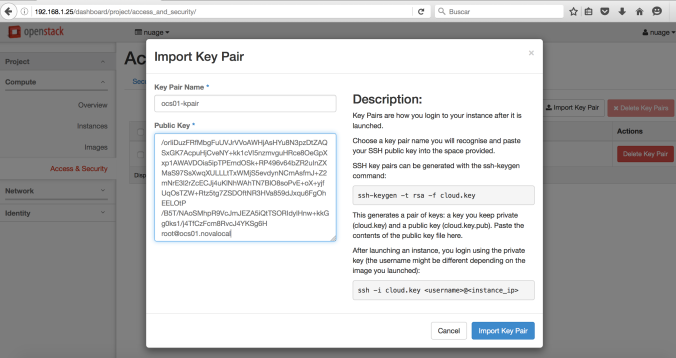

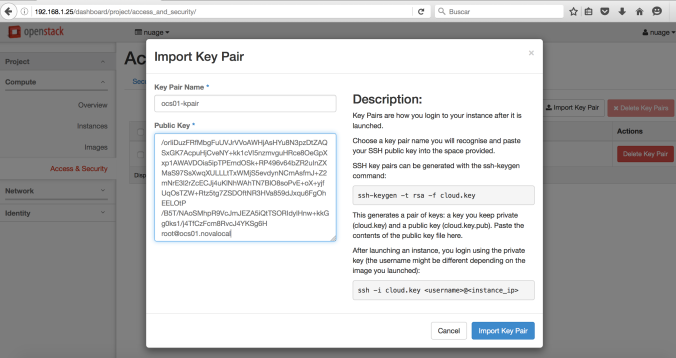

Now use “ssh-keygen” to generate a key pair on the controller:

[root@ocs01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

f8:d1:79:50:3d:4d:e6:2c:6c:13:e4:86:65:21:0e:c4 root@ocs01.novalocal

The key's randomart image is:

+--[ RSA 2048]----+

| oo oo*+o|

| E+ Bo=.|

| . o B.o|

| . . o o o |

| . S o . |

| . . . |

| . |

| |

| |

+-----------------+

[root@ocs01 ~]# cd .ssh/

[root@ocs01 .ssh]# cat id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDL/k1URNcPeTG3NZJENPloueh/orIiDuzFRfMbgFuUVJrVVoAWHjAsHYu8N3pzDtZAQSxGK7AcpuHjCveNY+kk1cVI5nzmvguHRce8OeGpXxp1AWAVDOia5ipTPEmdOSk+RP496v64bZR2uInZXMaS97SsXwqXULLLtTxWMjS5evdynNCmAsfmJ+Z2mNrE3l2rZcECJj4uKlNhWAhTN7BlO8soPvE+oX+yjfXqOsTZW+Rtz5tg7ZSDOftNR3HVa859dJxqu6FgOhEELOtP/B5T/NAoSMhpR9VcJmJEZA5iQtTSORIdylHnw+kkGg0ks1/j4TfCzFcm8RvcJ4YKSg6H root@ocs01.novalocal

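Create a new key pair named osc01-kpair for your OpenStack Controller by importing this public key. Do it as the nuage user, since the compute node below is launched with keystone_nuage credentials and this key pair.

Compute Node

We’ll use our snapshot of the controller to create the compute node. Switch to box01 to create the server (don’t forget to use keystone_nuage for credentials):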
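Here is a sketch of that import, assuming you have already copied /root/.ssh/id_rsa.pub from osc01 to box01 (the destination /root/osc01_id_rsa.pub is a hypothetical path) and sourced keystonerc_nuage. It uses the python-openstackclient `openstack keypair create --public-key` subcommand; the wrapper function is my own.

```bash
# import_osc_keypair [PUBKEY_FILE] [NAME]  (hypothetical helper, run on box01)
import_osc_keypair() {
  local pubkey="${1:-/root/osc01_id_rsa.pub}" name="${2:-osc01-kpair}"
  # Refuse to import anything that does not look like an OpenSSH RSA public key
  if ! grep -q '^ssh-rsa ' "$pubkey"; then
    echo "not an ssh-rsa public key: $pubkey" >&2
    return 1
  fi
  openstack keypair create --public-key "$pubkey" "$name"
}
```

For example: scp root@192.168.1.30:/root/.ssh/id_rsa.pub /root/osc01_id_rsa.pub, then import_osc_keypair /root/osc01_id_rsa.pub osc01-kpair.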

[root@box01 ~(keystone_nuage)]# openstack server create --image snap-osc01-160516-packstack-pkg --flavor nuage.nova.2 --key-name osc01-kpair --nic net-id=nuage-lab,v4-fixed-ip=192.168.101.7 nova01

| Field | Value |
|---|---|
| OS-DCF:diskConfig | MANUAL |
| OS-EXT-AZ:availability_zone | |
| OS-EXT-STS:power_state | 0 |
| OS-EXT-STS:task_state | scheduling |
| OS-EXT-STS:vm_state | building |
| OS-SRV-USG:launched_at | None |
| OS-SRV-USG:terminated_at | None |
| accessIPv4 | |
| accessIPv6 | |
| addresses | |
| adminPass | GTbBa5A6JxzS |
| config_drive | |
| created | 2016-05-23T17:23:55Z |
| flavor | nuage.nova.2 (4e191554-25f9-4ce7-bb1b-80941d6ab839) |
| hostId | |
| id | c0f78a72-e304-4292-8620-c0581a9e6aa8 |
| image | snap-160519-nova01 (958f0ed6-b186-4a72-a662-df78c3ab78b8) |
| key_name | osc01-kpair |
| name | nova01 |
| os-extended-volumes:volumes_attached | [] |
| progress | 0 |
| project_id | 39e2f35bc10f4047b1ea77f79559807d |
| properties | |
| security_groups | [{u'name': u'default'}] |
| status | BUILD |
| updated | 2016-05-23T17:23:56Z |
| user_id | c91cd992e79149209c41416a55a661b1 |

A few minutes later, go back to osc01. Check the connection from your OpenStack Controller to the nova server and add the controller’s public key to /root/.ssh/authorized_keys:

[root@ocs01 ~]# ssh centos@192.168.101.7

The authenticity of host '192.168.101.7 (192.168.101.7)' can't be established.

ECDSA key fingerprint is aa:31:dd:ab:9a:08:3d:7a:23:93:71:97:e1:fb:15:6b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.101.7' (ECDSA) to the list of known hosts.

Last login: Mon May 16 19:38:42 2016 from 192.168.1.66

[centos@nova01 ~]$

[centos@nova01 ~]$ sudo vi /root/.ssh/authorized_keys

#

# add osc01's public key (the id_rsa.pub shown above)

#

[centos@nova01 ~]$ exit

logout

Connection to 192.168.101.7 closed.

[root@ocs01 ~]# ssh 192.168.101.7

Last login: Tue May 17 18:12:23 2016

[root@nova01 ~]#

IMPORTANT: Also add this public key to /root/.ssh/authorized_keys on the ocs01 server itself, since PackStack connects to the controller over SSH too.

Once you can log in to nova01 as root, sync NTP:
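Pasting the key with vi works, but a small helper avoids duplicate entries and wrong permissions. A sketch, assuming it runs as root on the target host (nova01 or osc01) and that the controller’s id_rsa.pub has already been copied there; authorize_key is a hypothetical name, and 700/600 are the usual modes for ~/.ssh and authorized_keys.

```bash
# authorize_key PUBKEY_FILE [AUTH_FILE]  (hypothetical helper)
# Appends the first line of PUBKEY_FILE to AUTH_FILE exactly once.
authorize_key() {
  local pubkey_file="$1" auth_file="${2:-/root/.ssh/authorized_keys}"
  local key ssh_dir
  key=$(head -n 1 "$pubkey_file")
  ssh_dir=$(dirname "$auth_file")
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth_file"
  # -x: whole-line match, -F: treat the key as a fixed string, not a regex
  if ! grep -qxF "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
  chmod 600 "$auth_file"
}
```

For example, on ocs01: authorize_key /root/.ssh/id_rsa.pub.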

[root@nova01 ~]# ntpdate -u jumpbox.nuage.lab

17 May 18:17:38 ntpdate[9205]: adjust time server 192.168.101.3 offset 0.018297 sec

[root@nova01 ~]# service ntpd start

Redirecting to /bin/systemctl start ntpd.service

[root@nova01 ~]# ntpstat

synchronised to NTP server (192.168.101.3) at stratum 4

time correct to within 8139 ms

polling server every 64 s

PackStack Installation: Using an answer file to install both servers

Now install PackStack from the controller (osc01), setting nova01 as the compute host in the answer file. First, generate the answer file:

[root@ocs01 ~]# packstack --gen-answer-file=/root/answer.txt

[root@ocs01 ~]# vi answer.txt

Change the following parameters:

CONFIG_CONTROLLER_HOST=192.168.101.6

CONFIG_COMPUTE_HOSTS=192.168.101.7

CONFIG_NETWORK_HOSTS=192.168.101.6

CONFIG_PROVISION_DEMO=n

CONFIG_CINDER_INSTALL=n

CONFIG_SWIFT_INSTALL=n

CONFIG_CEILOMETER_INSTALL=n

CONFIG_NAGIOS_INSTALL=n

CONFIG_NTP_SERVERS=192.168.101.3
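The answer-file edits above can be applied with sed instead of vi, which makes the run repeatable. A sketch assuming GNU sed and the KEY=VALUE format that packstack --gen-answer-file writes; set_answer and configure_answers are hypothetical helper names, and the values are the ones listed above.

```bash
# set_answer FILE KEY VALUE  (hypothetical helper)
# Replaces KEY=... if present, otherwise appends KEY=VALUE.
# '|' is the sed delimiter, so values may contain '/' but not '|' or '&'.
set_answer() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# The lab values used in this post
configure_answers() {
  local file="${1:-/root/answer.txt}"
  set_answer "$file" CONFIG_CONTROLLER_HOST 192.168.101.6
  set_answer "$file" CONFIG_COMPUTE_HOSTS 192.168.101.7
  set_answer "$file" CONFIG_NETWORK_HOSTS 192.168.101.6
  set_answer "$file" CONFIG_PROVISION_DEMO n
  set_answer "$file" CONFIG_CINDER_INSTALL n
  set_answer "$file" CONFIG_SWIFT_INSTALL n
  set_answer "$file" CONFIG_CEILOMETER_INSTALL n
  set_answer "$file" CONFIG_NAGIOS_INSTALL n
  set_answer "$file" CONFIG_NTP_SERVERS 192.168.101.3
}
```

Run configure_answers /root/answer.txt right after generating the file.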

Now, run “packstack --answer-file=/root/answer.txt”:

[root@ocs01 ~]# packstack --answer-file=/root/answer.txt

Welcome to the Packstack setup utility

The installation log file is available at: /var/tmp/packstack/20160517-184422-KxwSmh/openstack-setup.log

Installing:

Clean Up [ DONE ]

Discovering ip protocol version [ DONE ]

Setting up ssh keys [ DONE ]

Preparing servers [ DONE ]

Pre installing Puppet and discovering hosts' details [ DONE ]

Adding pre install manifest entries [ DONE ]

Installing time synchronization via NTP [ DONE ]

Setting up CACERT [ DONE ]

Adding AMQP manifest entries [ DONE ]

Adding MariaDB manifest entries [ DONE ]

Fixing Keystone LDAP config parameters to be undef if empty[ DONE ]

Adding Keystone manifest entries [ DONE ]

Adding Glance Keystone manifest entries [ DONE ]

Adding Glance manifest entries [ DONE ]

Adding Nova API manifest entries [ DONE ]

Adding Nova Keystone manifest entries [ DONE ]

Adding Nova Cert manifest entries [ DONE ]

Adding Nova Conductor manifest entries [ DONE ]

Creating ssh keys for Nova migration [ DONE ]

Gathering ssh host keys for Nova migration [ DONE ]

Adding Nova Compute manifest entries [ DONE ]

Adding Nova Scheduler manifest entries [ DONE ]

Adding Nova VNC Proxy manifest entries [ DONE ]

Adding OpenStack Network-related Nova manifest entries[ DONE ]

Adding Nova Common manifest entries [ DONE ]

Adding Neutron VPNaaS Agent manifest entries [ DONE ]

Adding Neutron FWaaS Agent manifest entries [ DONE ]

Adding Neutron LBaaS Agent manifest entries [ DONE ]

Adding Neutron API manifest entries [ DONE ]

Adding Neutron Keystone manifest entries [ DONE ]

Adding Neutron L3 manifest entries [ DONE ]

Adding Neutron L2 Agent manifest entries [ DONE ]

Adding Neutron DHCP Agent manifest entries [ DONE ]

Adding Neutron Metering Agent manifest entries [ DONE ]

Adding Neutron Metadata Agent manifest entries [ DONE ]

Adding Neutron SR-IOV Switch Agent manifest entries [ DONE ]

Checking if NetworkManager is enabled and running [ DONE ]

Adding OpenStack Client manifest entries [ DONE ]

Adding Horizon manifest entries [ DONE ]

Adding post install manifest entries [ DONE ]

Copying Puppet modules and manifests [ DONE ]

Applying 192.168.101.6_prescript.pp

Applying 192.168.101.7_prescript.pp

192.168.101.7_prescript.pp: [ DONE ]

192.168.101.6_prescript.pp: [ DONE ]

Applying 192.168.101.6_chrony.pp

Applying 192.168.101.7_chrony.pp

192.168.101.7_chrony.pp: [ DONE ]

192.168.101.6_chrony.pp: [ DONE ]

Applying 192.168.101.6_amqp.pp

Applying 192.168.101.6_mariadb.pp

192.168.101.6_amqp.pp: [ DONE ]

192.168.101.6_mariadb.pp: [ DONE ]

Applying 192.168.101.6_keystone.pp

Applying 192.168.101.6_glance.pp

192.168.101.6_keystone.pp: [ DONE ]

192.168.101.6_glance.pp: [ DONE ]

Applying 192.168.101.6_api_nova.pp

192.168.101.6_api_nova.pp: [ DONE ]

Applying 192.168.101.6_nova.pp

Applying 192.168.101.7_nova.pp

192.168.101.6_nova.pp: [ DONE ]

192.168.101.7_nova.pp: [ DONE ]

Applying 192.168.101.6_neutron.pp

Applying 192.168.101.7_neutron.pp

192.168.101.7_neutron.pp: [ DONE ]

192.168.101.6_neutron.pp: [ DONE ]

Applying 192.168.101.6_osclient.pp

Applying 192.168.101.6_horizon.pp

192.168.101.6_osclient.pp: [ DONE ]

192.168.101.6_horizon.pp: [ DONE ]

Applying 192.168.101.6_postscript.pp

Applying 192.168.101.7_postscript.pp

192.168.101.7_postscript.pp: [ DONE ]

192.168.101.6_postscript.pp: [ DONE ]

Applying Puppet manifests [ DONE ]

Finalizing [ DONE ]

**** Installation completed successfully ******

Additional information:

* File /root/keystonerc_admin has been created on OpenStack client host 192.168.101.6. To use the command line tools you need to source the file.

* To access the OpenStack Dashboard browse to http://192.168.101.6/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

* Because of the kernel update the host 192.168.101.6 requires reboot.

* The installation log file is available at: /var/tmp/packstack/20160517-184422-KxwSmh/openstack-setup.log

* The generated manifests are available at: /var/tmp/packstack/20160517-184422-KxwSmh/manifests

Reboot the controller (PackStack asks for it because of the kernel update).

OpenStack Controller: Installing Nuage Plugin for Liberty

First, stop and disable the Neutron agent services on the controller/network node osc01:

[root@osc01 ~]# systemctl stop neutron-dhcp-agent.service

[root@osc01 ~]# systemctl stop neutron-l3-agent.service

[root@osc01 ~]# systemctl stop neutron-metadata-agent.service

[root@osc01 ~]# systemctl stop neutron-openvswitch-agent.service

[root@osc01 ~]# systemctl stop neutron-netns-cleanup.service

[root@osc01 ~]# systemctl stop neutron-ovs-cleanup.service

[root@osc01 ~]# systemctl disable neutron-dhcp-agent.service

Removed symlink /etc/systemd/system/multi-user.target.wants/neutron-dhcp-agent.service.

[root@osc01 ~]# systemctl disable neutron-l3-agent.service

Removed symlink /etc/systemd/system/multi-user.target.wants/neutron-l3-agent.service.

[root@osc01 ~]# systemctl disable neutron-metadata-agent.service

Removed symlink /etc/systemd/system/multi-user.target.wants/neutron-metadata-agent.service.

[root@osc01 ~]# systemctl disable neutron-openvswitch-agent.service

Removed symlink /etc/systemd/system/multi-user.target.wants/neutron-openvswitch-agent.service.

[root@osc01 ~]# systemctl disable neutron-netns-cleanup.service

[root@osc01 ~]# systemctl disable neutron-ovs-cleanup.service

Removed symlink /etc/systemd/system/multi-user.target.wants/neutron-ovs-cleanup.service.
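The stop/disable sequence above can be written as a loop over the same six units. A minimal sketch (disable_neutron_agents is a hypothetical name); it issues stop and disable separately, exactly like the commands above.

```bash
# disable_neutron_agents  (hypothetical helper, run as root on osc01)
disable_neutron_agents() {
  local svc
  for svc in neutron-dhcp-agent neutron-l3-agent neutron-metadata-agent \
             neutron-openvswitch-agent neutron-netns-cleanup neutron-ovs-cleanup; do
    systemctl stop "${svc}.service"
    systemctl disable "${svc}.service"
  done
}
```

Afterwards, systemctl is-enabled neutron-l3-agent should report disabled.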

Get the RPM files for OpenStack Liberty (el7) from Nokia’s support site (send me a comment if you need help with that), and install them:

[root@osc01 ~]# ls

answer.txt nuage-openstack-heat-5.0.0.1818-nuage.noarch.rpm

keystonerc_admin nuage-openstack-horizon-8.0.0.1818-nuage.noarch.rpm

nuage-metadata-agent-3.2.6-232.el7.x86_64.rpm nuage-openstack-neutron-7.0.0.1818-nuage.noarch.rpm

nuagenetlib-2015.1.3.2.6_228-nuage.noarch.rpm nuage-openstack-neutronclient-3.1.0.1818-nuage.noarch.rpm

[root@osc01 ~]# rpm -i nuagenetlib-2015.1.3.2.6_228-nuage.noarch.rpm

[root@osc01 ~]# rpm -i nuage-openstack-neutron-7.0.0.1818-nuage.noarch.rpm

[root@osc01 ~]# rpm -i nuage-openstack-neutronclient-3.1.0.1818-nuage.noarch.rpm

[root@osc01 ~]# rpm -i nuage-openstack-horizon-8.0.0.1818-nuage.noarch.rpm

[root@osc01 ~]# rpm -i nuage-openstack-heat-5.0.0.1818-nuage.noarch.rpm

[root@osc01 ~]# rpm -i nuage-metadata-agent-3.2.6-232.el7.x86_64.rpm

Configuring Nuage plugin

Modify the neutron.conf file using the token string from the keystone.conf file, then create the Nuage plugin configuration file:

[root@osc01 ~]# mkdir /etc/neutron/plugins/nuage/

[root@osc01 ~]# vi /etc/neutron/plugins/nuage/nuage_plugin.ini

[root@osc01 ~]# cat /etc/neutron/plugins/nuage/nuage_plugin.ini

[RESTPROXY]

default_net_partition_name = OpenStack_Lab

auth_resource = /me

server = 192.168.101.4:8443

organization = csp

serverauth = csproot:csproot

serverssl = True

base_uri = /nuage/api/v3_2
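If you prefer not to use vi, the same nuage_plugin.ini can be written with a heredoc. A sketch using the lab values from this post (VSD at 192.168.101.4:8443, csproot credentials, net partition OpenStack_Lab); adjust them for your VSD. The function name and its optional path argument are my own additions.

```bash
# write_nuage_plugin_ini [PATH]  (hypothetical helper)
write_nuage_plugin_ini() {
  local ini="${1:-/etc/neutron/plugins/nuage/nuage_plugin.ini}"
  mkdir -p "$(dirname "$ini")"
  # Quoted 'EOF': the body is written literally, with no shell expansion
  cat > "$ini" <<'EOF'
[RESTPROXY]
default_net_partition_name = OpenStack_Lab
auth_resource = /me
server = 192.168.101.4:8443
organization = csp
serverauth = csproot:csproot
serverssl = True
base_uri = /nuage/api/v3_2
EOF
}
```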

Now, let’s modify /etc/nova/nova.conf. Change the following lines (this must be done on all compute and controller/network nodes):

use_forwarded_for = False

[neutron]

service_metadata_proxy = True

metadata_proxy_shared_secret=NuageNetworksSharedSecret

ovs_bridge=alubr0

security_group_api=neutron
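Here is a sketch of applying these nova.conf settings with a small awk-based ini_set helper (hypothetical, written for this example; crudini from the RDO/EPEL repos does the same job if you have it installed). It replaces an active "key =" line in the section, leaves commented-out defaults alone, and otherwise adds the key at the end of the section. One assumption to flag: I place use_forwarded_for and security_group_api in [DEFAULT] and the other three keys in [neutron], which is where I understand Liberty’s Nova reads them.

```bash
# ini_set FILE SECTION KEY VALUE  (hypothetical helper, not part of Nova/PackStack)
# KEY is used inside a regex, so stick to plain option names.
# The file is rewritten through cat so its owner and mode are kept.
ini_set() {
  local file="$1" section="$2" key="$3" value="$4" tmp
  tmp="${file}.tmp.$$"
  awk -v sec="[$section]" -v key="$key" -v val="$value" '
    # Add the key before leaving the target section if it was never found
    function flush() { if (insec && !found) { print key "=" val; found = 1 } }
    /^\[.*\]/ { flush(); insec = ($0 == sec); print; next }
    # Replace active (uncommented) KEY lines inside the target section
    insec && ($0 ~ ("^[ \t]*" key "[ \t]*=")) { print key "=" val; found = 1; next }
    { print }
    END { flush(); if (!found) { print sec; print key "=" val } }
  ' "$file" > "$tmp" && cat "$tmp" > "$file" && rm -f "$tmp"
}

# Settings from this post; the section placement is my assumption (see above)
configure_nova_for_nuage() {
  local conf="${1:-/etc/nova/nova.conf}"
  ini_set "$conf" DEFAULT use_forwarded_for False
  ini_set "$conf" DEFAULT security_group_api neutron
  ini_set "$conf" neutron service_metadata_proxy True
  ini_set "$conf" neutron metadata_proxy_shared_secret NuageNetworksSharedSecret
  ini_set "$conf" neutron ovs_bridge alubr0
}
```

Run configure_nova_for_nuage on osc01 and nova01, then restart the Nova services.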

Configuring Neutron

Edit or add the following line in /etc/neutron/neutron.conf, and don’t forget to comment out “service_plugins = router”:

core_plugin = neutron.plugins.nuage.plugin.NuagePlugin
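Both neutron.conf changes can be made with sed as well. A sketch assuming GNU sed and that PackStack left an active core_plugin line in the file (the line is replaced, not added); configure_neutron_for_nuage is a hypothetical name, and it keeps a .bak copy of the original file.

```bash
# configure_neutron_for_nuage [FILE]  (hypothetical helper)
configure_neutron_for_nuage() {
  local conf="${1:-/etc/neutron/neutron.conf}"
  cp -p "$conf" "${conf}.bak"   # keep the PackStack version around
  # 1) comment out the active service_plugins line ("&" is the matched text)
  # 2) point core_plugin at the Nuage plugin
  sed -i \
    -e 's/^service_plugins[[:blank:]]*=/#&/' \
    -e 's/^core_plugin[[:blank:]]*=.*/core_plugin = neutron.plugins.nuage.plugin.NuagePlugin/' \
    "$conf"
}
```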

Required installation tasks on the PackStack Controller

More changes: copy “nuage-openstack-upgrade-1818.tar.gz” to the PackStack controller, extract it and run the set_and_audit_cms.py script:

[root@osc01 ~]# mkdir /tmp/nuage

[root@osc01 ~]# mkdir /tmp/nuage/upgrade

[root@osc01 ~]# cd /tmp/nuage/upgrade

[root@osc01 upgrade]# mv /root/

.

[root@osc01 upgrade]# tar -xzf nuage-openstack-upgrade-1818.tar.gz

[root@osc01 upgrade]# python set_and_audit_cms.py --neutron-config-file /etc/neutron/neutron.conf --plugin-config-file /etc/neutron/plugins/nuage/nuage_plugin.ini

WARNING:oslo_config.cfg:Option "verbose" from group "DEFAULT" is deprecated for removal. Its value may be silently ignored in the future.

INFO:VPort_Sync:Starting Vports Sync.

WARNING:neutron.notifiers.nova:Authenticating to nova using nova_admin_* options is deprecated. This should be done using an auth plugin, like password

WARNING:oslo_config.cfg:Option "nova_region_name" from group "DEFAULT" is deprecated. Use option "region_name" from group "nova".

INFO:VPort_Sync:Vports Sync on VSD is now complete.

INFO:generate_cms_id:created CMS 031b436e-3181-4705-8285-e74816d9f5b9

WARNING:neutron.notifiers.nova:Authenticating to nova using nova_admin_* options is deprecated. This should be done using an auth plugin, like password

WARNING:oslo_config.cfg:Option "nova_region_name" from group "DEFAULT" is deprecated. Use option "region_name" from group "nova".

INFO:Upgrade_Logger:Audit begins.

INFO:Upgrade_Logger:Checking subnets.

INFO:Upgrade_Logger:Subnets done.

INFO:Upgrade_Logger:Checking domains.

INFO:Upgrade_Logger:Domains done.

INFO:Upgrade_Logger:Checking static routes.

INFO:Upgrade_Logger:Static routes done.

INFO:Upgrade_Logger:Checking acl entry templates.

INFO:Upgrade_Logger:Acl entry templates done.

INFO:Upgrade_Logger:Checking policy groups.

INFO:Upgrade_Logger:Policy groups done.

INFO:Upgrade_Logger:Checking floating ips.

INFO:Upgrade_Logger:Floating ips done.

INFO:Upgrade_Logger:Checking vports.

INFO:Upgrade_Logger:Vports done.

INFO:Upgrade_Logger:Checking shared network resources.

INFO:Upgrade_Logger:Shared network resources done.

INFO:Upgrade_Logger:Checking application domains.

INFO:Upgrade_Logger:Application domains done.

INFO:Upgrade_Logger:File "audit.yaml" created.

INFO:Upgrade_Logger:Audit Finished.

INFO:Upgrade_Logger:Processing CMS ID discrepancies in the audit file...

INFO:Upgrade_Logger:Processed all the CMS ID discrepancies in the audit file

[root@osc01 upgrade]# systemctl restart neutron-server

[root@osc01 upgrade]# cd

[root@osc01 ~]# . keystonerc_admin

[root@osc01 ~(keystone_admin)]# nova list

| ID | Name | Status | Task State | Power State | Networks |
|---|---|---|---|---|---|

[root@osc01 ~]# systemctl restart neutron-server

[root@osc01 ~]# rm -rf /etc/neutron/plugin.ini

[root@osc01 ~]# ln -s /etc/neutron/plugins/nuage/nuage_plugin.ini /etc/neutron/plugin.ini

[root@osc01 ~]# neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/nuage/nuage_plugin.ini upgrade head

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Running upgrade for neutron ...

#

# Some boring lines

# More boring lines

#

INFO [alembic.runtime.migration] Running upgrade 1b4c6e320f79 -> 48153cb5f051, qos db changes

INFO [alembic.runtime.migration] Running upgrade 48153cb5f051 -> 9859ac9c136, quota_reservations

INFO [alembic.runtime.migration] Running upgrade 9859ac9c136 -> 34af2b5c5a59, Add dns_name to Port

OK

[root@osc01 ~]# systemctl restart openstack-nova-api

[root@osc01 ~]# systemctl restart openstack-nova-scheduler

[root@osc01 ~]# systemctl restart openstack-nova-conductor

[root@osc01 ~]# systemctl restart neutron-server

Let’s just check that we can reach Horizon (don’t log in yet!).

Compute Node: Configuring nova.conf and installing VRS

Now it’s time to make the same changes on our compute node, nova01. First, add the EPEL repository and update the system:

[root@nova01 ~]# rpm -Uvh http://mirror.pnl.gov/epel/7/x86_64/e/epel-release-7-6.noarch.rpm

Retrieving http://mirror.pnl.gov/epel/7/x86_64/e/epel-release-7-6.noarch.rpm

warning: /var/tmp/rpm-tmp.VNThyF: Header V3 RSA/SHA256 Signature, key ID 352c64e5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:epel-release-7-6 ################################# [100%]

[root@nova01 ~]# vi /etc/yum.repos.d/CentOS-Base.repo

[root@nova01 ~]# yum -y update

Loaded plugins: fastestmirror

base | 3.6 kB 00:00:00

centosplus | 3.4 kB 00:00:00

epel/x86_64/metalink | 12 kB 00:00:00

epel | 4.3 kB 00:00:00

extras | 3.4 kB 00:00:00

updates | 3.4 kB 00:00:00

(1/4): centosplus/7/x86_64/primary_db | 2.3 MB 00:00:00

(2/4): epel/x86_64/updateinfo | 555 kB 00:00:01

(3/4): epel/x86_64/group_gz | 170 kB 00:00:01

(4/4): epel/x86_64/primary_db | 4.1 MB 00:00:00

Loading mirror speeds from cached hostfile

* base: mirror.rackspace.com

* centosplus: pubmirrors.dal.corespace.com

* epel: mirror.compevo.com

* extras: mirror.team-cymru.org

* updates: mirror.steadfast.net

Resolving Dependencies

#

# Boring lines

# more boring lines

#

Installed:

python2-boto.noarch 0:2.39.0-1.el7 python2-crypto.x86_64 0:2.6.1-9.el7 python2-ecdsa.noarch 0:0.13-4.el7 python2-msgpack.x86_64 0:0.4.7-3.el7

Dependency Installed:

libtomcrypt.x86_64 0:1.17-23.el7 libtommath.x86_64 0:0.42.0-4.el7 postgresql-libs.x86_64 0:9.2.15-1.el7_2 python2-rsa.noarch 0:3.4.1-1.el7

Updated:

hiera.noarch 1:1.3.4-5.el7 libndp.x86_64 0:1.2-6.el7_2 postfix.x86_64 2:2.10.1-6.0.1.el7.centos

python-contextlib2.noarch 0:0.5.1-1.el7 python-mimeparse.noarch 0:0.1.4-2.el7 python-perf.x86_64 0:3.10.0-327.18.2.el7.centos.plus

python-psutil.x86_64 0:2.2.1-1.el7 python-pygments.noarch 0:2.0.2-4.el7 python-qpid.noarch 0:0.32-13.el7

python-qpid-common.noarch 0:0.32-13.el7 python-requests.noarch 0:2.9.1-2.el7 python-unicodecsv.noarch 0:0.14.1-4.el7

python-unittest2.noarch 0:1.1.0-4.el7 python-urllib3.noarch 0:1.13.1-3.el7 python2-eventlet.noarch 0:0.18.4-1.el7

Replaced:

python-boto.noarch 0:2.25.0-2.el7.centos python-crypto.x86_64 0:2.6.1-1.el7.centos python-ecdsa.noarch 0:0.11-3.el7.centos

python-msgpack.x86_64 0:0.4.6-3.el7

Complete!

Nova/KVM: solving dependencies

Install some missing dependencies on the KVM host:

[root@nova01 ~]# yum install libvirt -y

#

# Boring lines

#

Installed:

libvirt.x86_64 0:1.2.17-13.el7_2.4

Dependency Installed:

libvirt-daemon-config-network.x86_64 0:1.2.17-13.el7_2.4 libvirt-daemon-driver-lxc.x86_64 0:1.2.17-13.el7_2.4

Complete!

[root@nova01 ~]# yum install python-twisted-core -y

#

# Boring lines

#

Installed:

python-twisted.x86_64 0:15.4.0-3.el7

Dependency Installed:

libXft.x86_64 0:2.3.2-2.el7 libXrender.x86_64 0:0.9.8-2.1.el7 pyserial.noarch 0:2.6-5.el7

python-characteristic.noarch 0:14.3.0-4.el7 python-service-identity.noarch 0:14.0.0-4.el7 python-zope-interface.x86_64 0:4.0.5-4.el7

python2-pyasn1-modules.noarch 0:0.1.9-6.el7.1 tcl.x86_64 1:8.5.13-8.el7 tix.x86_64 1:8.4.3-12.el7

tk.x86_64 1:8.5.13-6.el7 tkinter.x86_64 0:2.7.5-34.el7

Complete!

[root@nova01 ~]# yum install perl-JSON -y

#

# Boring lines

#

Installed:

perl-JSON.noarch 0:2.59-2.el7

Complete!

[root@nova01 ~]# yum install vconfig -y

#

# Boring lines

#

Installed:

vconfig.x86_64 0:1.9-16.el7

Complete!

Compute Node: Configure the Metadata agent

Delete the current /etc/default/nuage-metadata-agent file and create a new one with the following content:

[root@nova01 ~]# vi /etc/nova/nova.conf

[root@nova01 ~]# rm -rf /etc/default/nuage-metadata-agent

[root@nova01 ~]# vi /etc/default/nuage-metadata-agent

[centos@nova01 ~]$ cat /etc/default/nuage-metadata-agent

METADATA_PORT=9697

NOVA_METADATA_IP=127.0.0.1

NOVA_METADATA_PORT=8775

METADATA_PROXY_SHARED_SECRET="NuageNetworksSharedSecret"

NOVA_CLIENT_VERSION=2

NOVA_OS_USERNAME=nova

NOVA_OS_PASSWORD=2b12874fcf3c43ff

NOVA_OS_TENANT_NAME=services

NOVA_OS_AUTH_URL=http://192.168.101.6:5000/v2.0

NOVA_REGION_NAME=RegionOne

NUAGE_METADATA_AGENT_START_WITH_OVS=true

NOVA_API_ENDPOINT_TYPE=publicURL
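The METADATA_PROXY_SHARED_SECRET here has to match metadata_proxy_shared_secret in nova.conf (both are NuageNetworksSharedSecret in this lab). A quick sketch to check that, assuming the KEY=VALUE layout above and a single active metadata_proxy_shared_secret line in nova.conf; check_metadata_secret is a hypothetical name.

```bash
# check_metadata_secret [AGENT_FILE] [NOVA_CONF]  (hypothetical helper)
check_metadata_secret() {
  local agent_file="${1:-/etc/default/nuage-metadata-agent}"
  local nova_conf="${2:-/etc/nova/nova.conf}"
  local agent_secret nova_secret
  # Strip the key and any double quotes around the value
  agent_secret=$(sed -n 's/^METADATA_PROXY_SHARED_SECRET=//p' "$agent_file" | tr -d '"')
  # Only active (uncommented) lines, with or without spaces around "="
  nova_secret=$(sed -n 's/^metadata_proxy_shared_secret[[:blank:]]*=[[:blank:]]*//p' "$nova_conf")
  if [ -n "$agent_secret" ] && [ "$agent_secret" = "$nova_secret" ]; then
    echo "metadata secret OK"
  else
    echo "metadata secret mismatch: agent='$agent_secret' nova='$nova_secret'" >&2
    return 1
  fi
}
```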

Installing Nuage VRS

We’ll install VRS on the nova node, replacing the OVS instance.

[root@nova01 ~]# cd /tmp/nuage/

[root@nova01 nuage]# mv /root/nuage-openvswitch-* .

[root@nova01 nuage]# yum -y remove openvswitch

#

# Some boring lines

# More boring lines

#

Removed:

openvswitch.x86_64 0:2.4.0-1.el7

Dependency Removed:

openstack-neutron-openvswitch.noarch 1:7.0.4-1.el7

Complete!

[root@nova01 nuage]# yum -y remove python-openvswitch

#

# Some boring lines

# More boring lines

#

Removed:

python-openvswitch.noarch 0:2.4.0-1.el7

Complete!

[root@nova01 nuage]# yum -y install nuage-openvswitch-3.2.6-232.el7.x86_64.rpm

#

# Some boring lines

# More boring lines

#

Installed:

nuage-openvswitch.x86_64 0:3.2.6-232.el7

Dependency Installed:

perl-Sys-Syslog.x86_64 0:0.33-3.el7 protobuf-c.x86_64 0:1.0.2-2.el7 python-setproctitle.x86_64 0:1.1.6-5.el7

Complete!

[root@nova01 nuage]# vi /etc/default/openvswitch

[root@nova01 nuage]# cat /etc/default/openvswitch | grep 101.5

ACTIVE_CONTROLLER=192.168.101.5
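The ACTIVE_CONTROLLER edit can be scripted too. A sketch assuming GNU sed; set_active_controller is a hypothetical name. It rewrites the first ACTIVE_CONTROLLER= line (commented or not) or appends one if none exists; 192.168.101.5 is the VSC address used in this lab.

```bash
# set_active_controller VSC_IP [FILE]  (hypothetical helper)
set_active_controller() {
  local vsc_ip="$1" ovs_defaults="${2:-/etc/default/openvswitch}"
  if grep -q '^#*[[:blank:]]*ACTIVE_CONTROLLER=' "$ovs_defaults"; then
    # GNU sed: 0,/re/ limits the change to the first matching line
    sed -i "0,/^#*[[:blank:]]*ACTIVE_CONTROLLER=.*/s//ACTIVE_CONTROLLER=${vsc_ip}/" "$ovs_defaults"
  else
    printf 'ACTIVE_CONTROLLER=%s\n' "$vsc_ip" >> "$ovs_defaults"
  fi
}
```

For example: set_active_controller 192.168.101.5, then restart openvswitch.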

[root@nova01 nuage]# mv /root/nuage-metadata-agent-3.2.6-232.el7.x86_64.rpm .

[root@nova01 nuage]# rpm -i nuage-metadata-agent-3.2.6-232.el7.x86_64.rpm

[root@nova01 nuage]# vi /etc/nova/nova.conf

Configure nova.conf

We’ll modify /etc/nova/nova.conf as follows:

ovs_bridge=alubr0

Restart the services as follows:

[root@nova01 nuage]# systemctl restart openstack-nova-compute

[root@nova01 nuage]# systemctl restart openvswitch

Check the service status and connections:

[root@nova01 ~]# systemctl status openvswitch

● openvswitch.service - Nuage Openvswitch

Loaded: loaded (/usr/lib/systemd/system/openvswitch.service; enabled; vendor preset: disabled)

Active: active (exited) since Mon 2016-05-23 12:26:19 CDT; 9h ago

Main PID: 508 (code=exited, status=0/SUCCESS)

CGroup: /system.slice/openvswitch.service

├─ 601 ovsdb-server: monitoring pid 602 (healthy)

├─ 602 ovsdb-server /etc/openvswitch/conf.db -vconsole:emer -vsyslog:err -vfile:warn --remote=punix:/var/run/openvswitch/db.sock --private-key=db:O...

├─ 694 ovs-vswitchd: monitoring pid 695 (healthy)

├─ 695 ovs-vswitchd unix:/var/run/openvswitch/db.sock -vconsole:emer -vsyslog:err -vfile:warn --mlockall --no-chdir --log-file=/var/log/openvswitch...

├─1069 nuage-SysMon: monitoring pid 1070 healthy

├─1070 /usr/bin/python /sbin/nuage-SysMon -vany:console:emer -vany:syslog:err -vany:file:info --no-chdir --log-file=/var/log/openvswitch/nuage-SysM...

├─1121 monitor(vm-monitor): vm-monitor: monitoring pid 1122 (healthy)

├─1122 vm-monitor --no-chdir --log-file=/var/log/openvswitch/vm-monitor.log --pidfile=/var/run/openvswitch/vm-monitor.pid --detach --monitor

├─1144 nuage-rpc: monitoring pid 1145 (healthy)

└─1145 nuage-rpc unix:/var/run/openvswitch/db.sock -vconsole:emer -vsyslog:err -vfile:info --tcp 7406 --ssl 7407 --no-chdir --log-file=/var/log/ope...

May 23 12:26:13 nova01.novalocal openvswitch.init[508]: iptables: No chain/target/match by that name.

May 23 12:26:13 nova01.novalocal openvswitch.init[508]: iptables: No chain/target/match by that name.

May 23 12:26:13 nova01.novalocal openvswitch.init[508]: iptables: Bad rule (does a matching rule exist in that chain?).

May 23 12:26:16 nova01.novalocal openvswitch.init[508]: Starting nuage system monitor:Starting nuage-SysMon[ OK ]

May 23 12:26:19 nova01.novalocal openvswitch.init[508]: Starting vm-monitor:Starting vm-monitor:Starting vm-monitor[ OK ]

May 23 12:26:19 nova01.novalocal openvswitch.init[508]: Starting nuage rpc server:Starting nuage-rpc[ OK ]

May 23 12:26:19 nova01.novalocal systemd[1]: Started Nuage Openvswitch.

May 23 12:26:20 nova01.novalocal ovs-vsctl[1154]: ovs|00001|vsctl|INFO|Called as ovs-vsctl --no-wait --timeout=5 set Open_vSwitch . other_config:acl-...-port=514

May 23 12:26:22 nova01.novalocal ovs-vsctl[1185]: ovs|00001|vsctl|INFO|Called as ovs-vsctl --no-wait --timeout=5 set Open_vSwitch . other_config:stat...1.4:39090

May 23 12:29:24 nova01 systemd[1]: [/usr/lib/systemd/system/openvswitch.service:10] Unknown lvalue 'ExecRestart' in section 'Service'

Hint: Some lines were ellipsized, use -l to show in full.

[root@nova01 ~]# ovs-vsctl show

2df2c5a3-5f96-4186-bf54-4836d73e3b39

Bridge "alubr0"

Controller "ctrl1"

target: "tcp:192.168.101.5:6633"

role: master

is_connected: true

Port "svc-rl-tap1"

Interface "svc-rl-tap1"

Port "svc-rl-tap2"

Interface "svc-rl-tap2"

Port svc-pat-tap

Interface svc-pat-tap

type: internal

Port "alubr0"

Interface "alubr0"

type: internal

Bridge br-tun

fail_mode: secure

Port br-tun

Interface br-tun

type: internal

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Port "vxlan-c0a86506"

Interface "vxlan-c0a86506"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="192.168.101.7", out_key=flow, remote_ip="192.168.101.6"}

Bridge br-int

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

ovs_version: "3.2.6-232-nuage"
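To check the VSC connection from a script rather than by eye, you can scan the ovs-vsctl show output. A sketch (alubr0_connected is a hypothetical name) that succeeds only when "is_connected: true" appears under the alubr0 bridge; it assumes the output layout shown above.

```bash
# alubr0_connected  (hypothetical helper): reads "ovs-vsctl show" output on stdin
alubr0_connected() {
  awk '
    # Track which bridge the following lines belong to (quotes stripped)
    /^[ \t]*Bridge[ \t]/ { name = $2; gsub(/"/, "", name); in_alubr0 = (name == "alubr0") }
    in_alubr0 && /is_connected:[ \t]*true/ { found = 1 }
    END { exit !found }
  '
}
```

Usage on nova01: ovs-vsctl show | alubr0_connected && echo "VRS is connected to the VSC".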

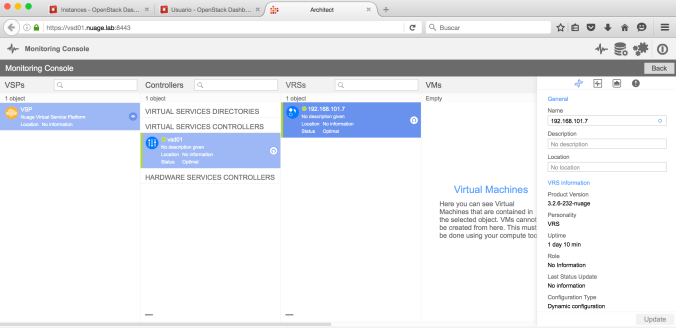

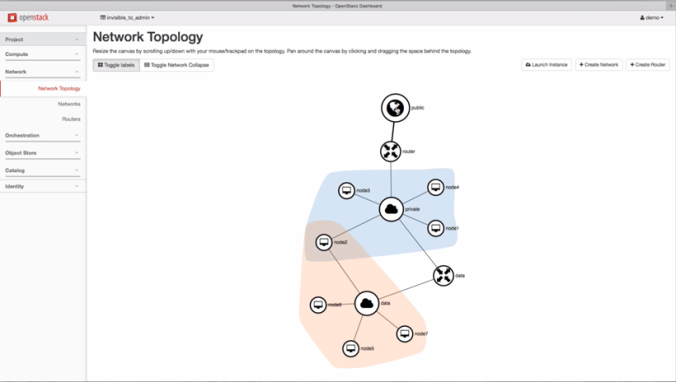

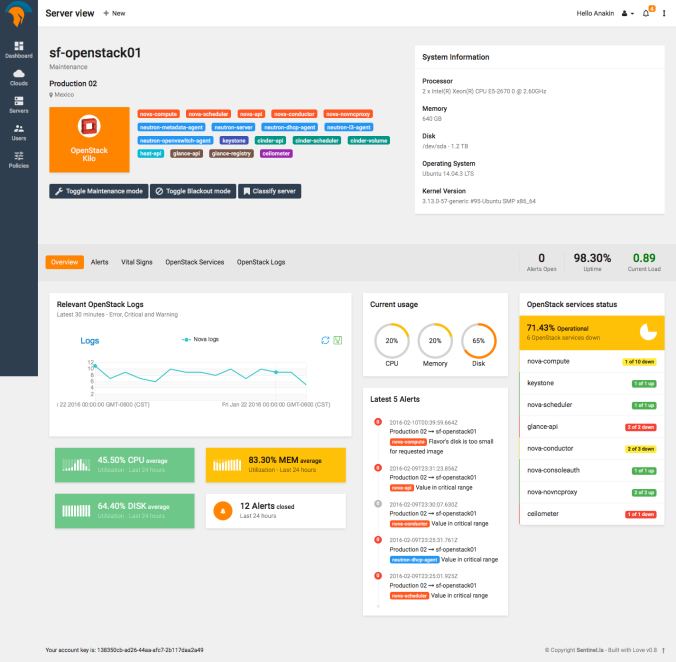

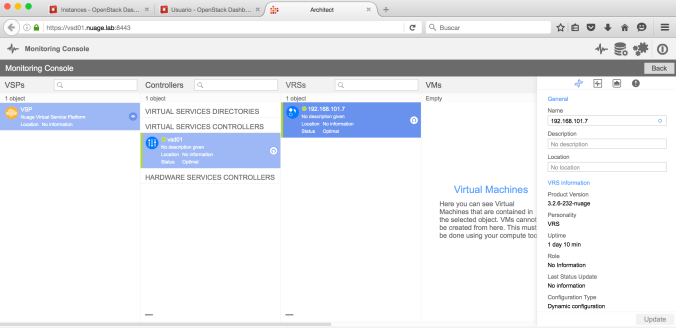

The next images show what you should get in the console:

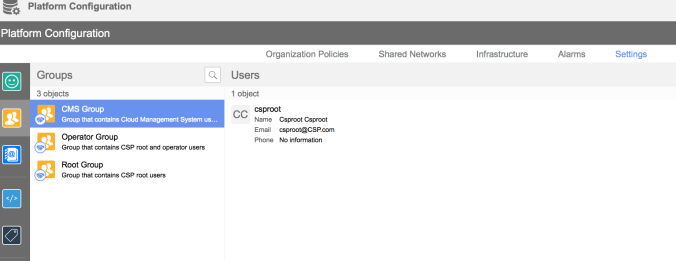

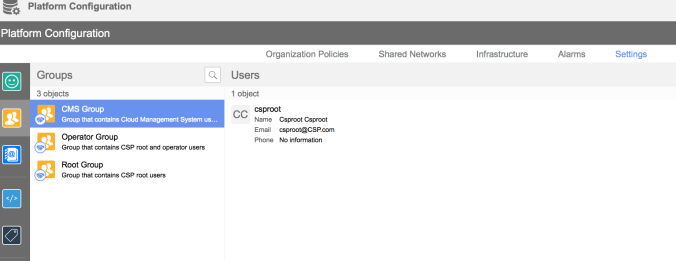

Last step: add the csproot user to the CMS Group.

And we are done with our lab. Thanks very much for reading!

See you.